Understanding Salesforce’s Einstein Trust Layer

Generative AI is revolutionizing industries by introducing new ways to enhance customer service, sales processes, and operational productivity. Despite its advantages, this swift adoption brings concerns regarding data protection, privacy, and ethical AI practices. Salesforce’s Einstein Trust Layer effectively addresses these challenges, offering a comprehensive security framework that allows businesses to adopt generative AI with confidence and trust.

What is the Einstein Trust Layer?

The Einstein Trust Layer is a groundbreaking security solution from Salesforce that safeguards sensitive information while facilitating the secure integration of generative AI technologies. It employs a series of protective mechanisms, including data masking, secure data retrieval, and zero-retention policies, ensuring that AI-driven processes remain secure and compliant with privacy regulations.

Trust in AI is more than a buzzword—it’s a necessity. Organizations rely on AI to process vast amounts of sensitive customer data. Without adequate safeguards, issues like data breaches, AI bias, and unethical data usage can arise, jeopardizing customer trust and brand reputation. The Einstein Trust Layer establishes a foundation where AI-powered tools function within a robust, ethical, and transparent security framework.

Related Read – Salesforce Einstein Trust Layer Cheat Sheet

Key Features of the Einstein Trust Layer

1. Secure Data Retrieval

At the core of the Einstein Trust Layer is secure data retrieval, a feature designed to ensure that only authorized individuals can access specific information. This is achieved through stringent Salesforce role-based access controls and granular field-level security protocols. This feature grounds AI responses in accurate, contextual data without exposing sensitive information.

2. Dynamic Grounding

Dynamic grounding enriches prompts by incorporating real-time, contextual data. This prevents AI models from generating irrelevant or misleading responses, ensuring outputs are accurate and aligned with business needs.

3. Data Masking

Sensitive information, such as personally identifiable information (PII), is automatically masked before being processed by AI. By replacing PII with placeholders, Salesforce ensures that sensitive data is never exposed to external systems.

4. Zero Retention Policy

With its zero-retention policy, the Einstein Trust Layer guarantees that no customer data is stored or used for model training by external AI providers. Once a prompt is processed, both the input and output are erased, offering unparalleled data privacy.

5. Toxicity Detection

It also evaluates AI-generated outputs to identify and mitigate inappropriate or harmful content, such as offensive language or discriminatory remarks. Responses flagged for toxicity are reviewed, ensuring communication remains professional and compliant.

6. Audit Trails

Every AI interaction is meticulously logged, creating a transparent audit trail. This includes details of prompts, responses, and user feedback, allowing organizations to track and analyze AI operations for compliance and improvement.

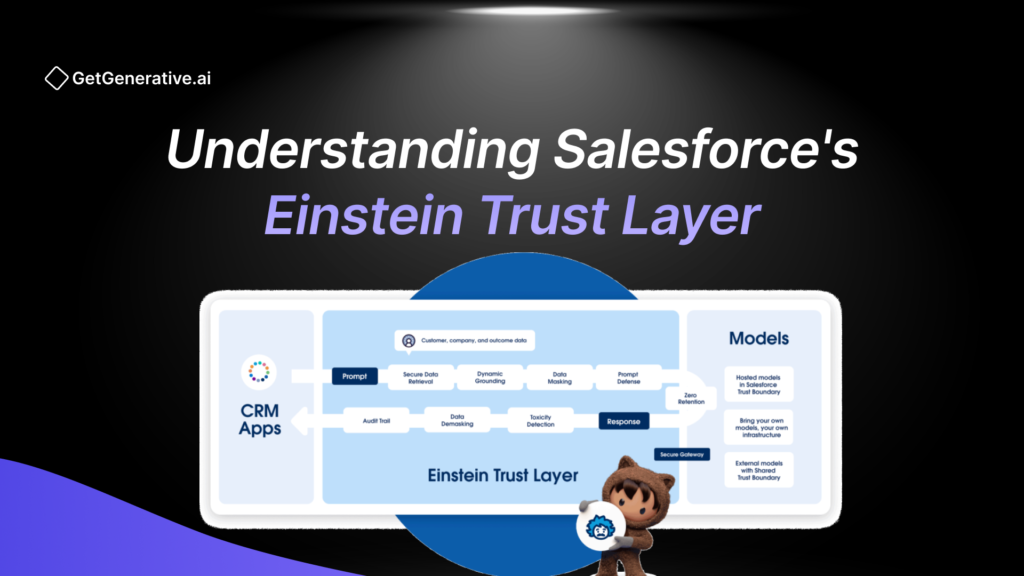

How the Einstein Trust Layer Works

The Einstein Trust Layer functions as an intermediary between Salesforce and large language models (LLMs). Here’s a step-by-step overview:

Step 1: Secure Data Retrieval

Data is securely pulled from Salesforce, respecting all user permissions and security settings. This ensures that the AI works with accurate and authorized information.

Step 2: Dynamic Grounding

AI prompts are enriched with relevant data, ensuring that responses are contextually accurate and aligned with real-time business needs.

Step 3: Data Masking

Sensitive data is masked to prevent exposure during processing, with placeholders replacing PII.

Step 4: Prompt Defense

Pre- and post-prompting instructions guide AI models to avoid generating biased, irrelevant, or harmful outputs.

Step 5: LLM Gateway

Prompts are processed through a secure LLM gateway, which ensures data encryption and enforces zero-retention policies.

Step 6: Toxicity Detection and Response Generation

Generated outputs are scanned for toxicity before being demasked and delivered to the user, ensuring safe and compliant AI interactions.

Also Read – The Ultimate Salesforce Einstein Copliot Cheat Sheet

Applications of the Einstein Trust Layer

1. Personalized Customer Interactions

Sales teams use AI-generated insights to tailor communication, ensuring relevance and enhancing customer relationships.

2. Efficient Sales Processes

AI tools, such as Einstein Copilot Studio, streamline sales operations by generating accurate, data-driven responses for lead management and customer queries.

3. Fraud Prevention

The Trust Layer enhances risk detection by securely processing sensitive data, helping organizations identify and mitigate fraudulent activities.

4. AI-Powered Automation

From automated customer support to real-time data analysis, the Einstein Trust Layer powers generative AI capabilities without compromising security.

Also Read – Key Features of Salesforce EinsteinGPT

Conclusion

The Einstein Trust Layer is a game-changer in the AI landscape, combining robust security measures with the power of generative AI. Its multi-layered approach—from secure data retrieval to dynamic grounding and audit trails—ensures businesses can embrace AI with confidence. As AI adoption grows, tools like the Einstein Trust Layer will be critical for bridging the trust gap, fostering a safer and more transparent AI-driven future.

To learn more, visit GetGenerative.ai.

FAQs

1. What makes the Einstein Trust Layer unique?

The Einstein Trust Layer combines dynamic grounding, data masking, and zero-retention policies to ensure secure, ethical, and accurate AI interactions.

2. Can businesses customize the Trust Layer?

Yes, businesses can customize security settings, define user permissions, and tailor prompts to align with their specific needs.

3. How does the Trust Layer ensure data privacy?

By masking sensitive data, enforcing zero-retention policies, and using secure gateways, the Trust Layer protects customer information at every step.

4. What industries benefit most from the Einstein Trust Layer?

Industries like finance, healthcare, and e-commerce benefit significantly by leveraging secure, AI-powered tools for data management and customer engagement.

5. How does toxicity detection work?

Toxicity detection scans AI outputs for harmful content and flags inappropriate responses, ensuring professional and compliant communication.